There are many rerankers to choose from. Which one works best in real-world applications? We decided to find out.

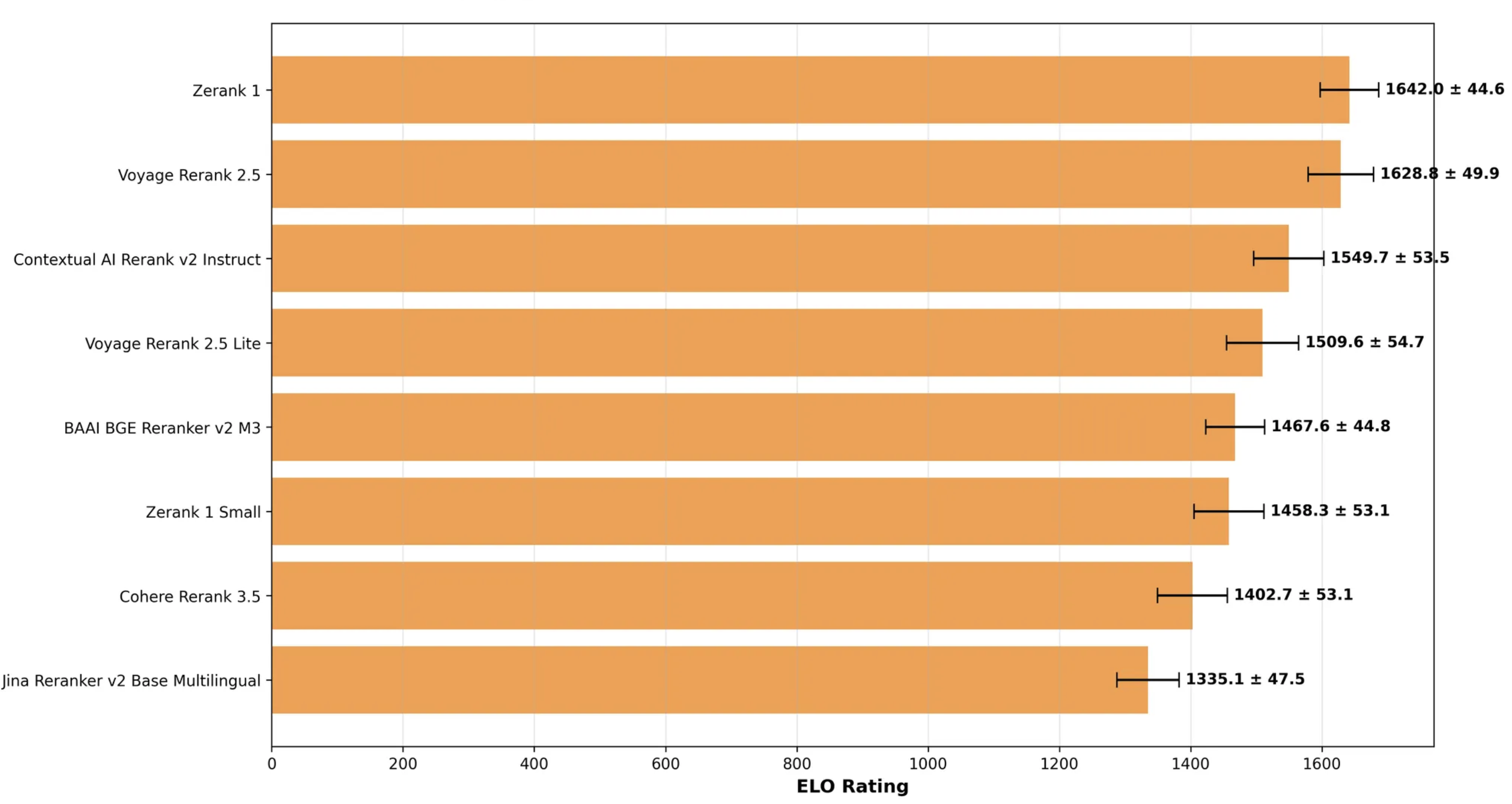

To do that, we built our own benchmark comparing eight leading rerankers under identical conditions, evaluating them on speed, accuracy, and an LLM-judged ELO score.

Context

Each reranker ran on the same setup: BGE-small-en-v1.5 embeddings, FAISS retrieval of the top 50 candidates, and six datasets. We tracked performance with latency, nDCG, and Recall.
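For readers less familiar with the two quality metrics, here is a minimal sketch of how nDCG@k and Recall@k are commonly computed for a single query. It assumes graded relevance labels stored in a dict and a reranked list of document IDs. It uses the linear-gain form of DCG (some implementations use `2**rel - 1` instead), and the function names are illustrative, not taken from our evaluation pipeline.

```python
import math


def dcg_at_k(ranked_ids, relevance, k):
    """Discounted cumulative gain over the top-k ranked documents (linear gain)."""
    return sum(
        relevance.get(doc_id, 0) / math.log2(rank + 2)  # rank is 0-based
        for rank, doc_id in enumerate(ranked_ids[:k])
    )


def ndcg_at_k(ranked_ids, relevance, k):
    """DCG normalized by the DCG of the ideal (best possible) ordering."""
    ideal = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(rel / math.log2(rank + 2) for rank, rel in enumerate(ideal))
    return dcg_at_k(ranked_ids, relevance, k) / idcg if idcg > 0 else 0.0


def recall_at_k(ranked_ids, relevance, k):
    """Fraction of all relevant documents that appear in the top-k."""
    relevant = {doc_id for doc_id, rel in relevance.items() if rel > 0}
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant)
    return hits / len(relevant)


# Hypothetical labels and reranker output for one query.
labels = {"d1": 3, "d4": 1, "d7": 2}
reranked = ["d1", "d2", "d7", "d4"]
print(ndcg_at_k(reranked, labels, k=3), recall_at_k(reranked, labels, k=3))
```

nDCG rewards putting the most relevant documents near the top, while Recall only checks whether they made it into the cutoff at all, which is why benchmarks usually report both.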

To measure perceived relevance, we used ELO scoring. For every query, GPT-5 compared the top-5 results from two rerankers (shown anonymously) and selected the list it found more relevant. Each win or loss adjusted both models' ELO scores, revealing which models the LLM consistently preferred.
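The rating update behind this kind of pairwise judging can be sketched with the standard Elo formula. This is a minimal illustration, not our exact configuration: the starting rating of 1500, the K-factor of 32, and the placeholder model names are assumptions chosen for the example.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(ratings, winner, loser, k=32):
    """Apply one LLM judgment: `winner`'s top-5 list was preferred over `loser`'s.

    k=32 is an assumed K-factor; larger values make ratings move faster.
    """
    expected_win = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - expected_win)
    ratings[winner] += delta  # an upset (low expected_win) earns more points
    ratings[loser] -= delta   # zero-sum: the loser gives up the same amount
    return ratings


# Hypothetical rerankers, all starting from an assumed 1500 rating.
ratings = {name: 1500.0 for name in ["reranker_a", "reranker_b", "reranker_c"]}
# Hypothetical (winner, loser) judgments returned by the LLM judge.
judgments = [("reranker_a", "reranker_b"), ("reranker_a", "reranker_c"),
             ("reranker_c", "reranker_b")]
for winner, loser in judgments:
    update_elo(ratings, winner, loser)
print(sorted(ratings.items(), key=lambda item: -item[1]))
```

Because each update depends on the current ratings, the order of comparisons affects the final scores slightly; shuffling the judgments or averaging over several orderings is a common way to reduce that effect.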

What We Found

Among all tested models, Zerank-1 achieved the highest ELO score, leading the overall benchmark. Voyage Rerank 2.5 followed closely, offering similar quality at roughly half the latency. That makes it the most balanced choice for production use, where both speed and quality matter.

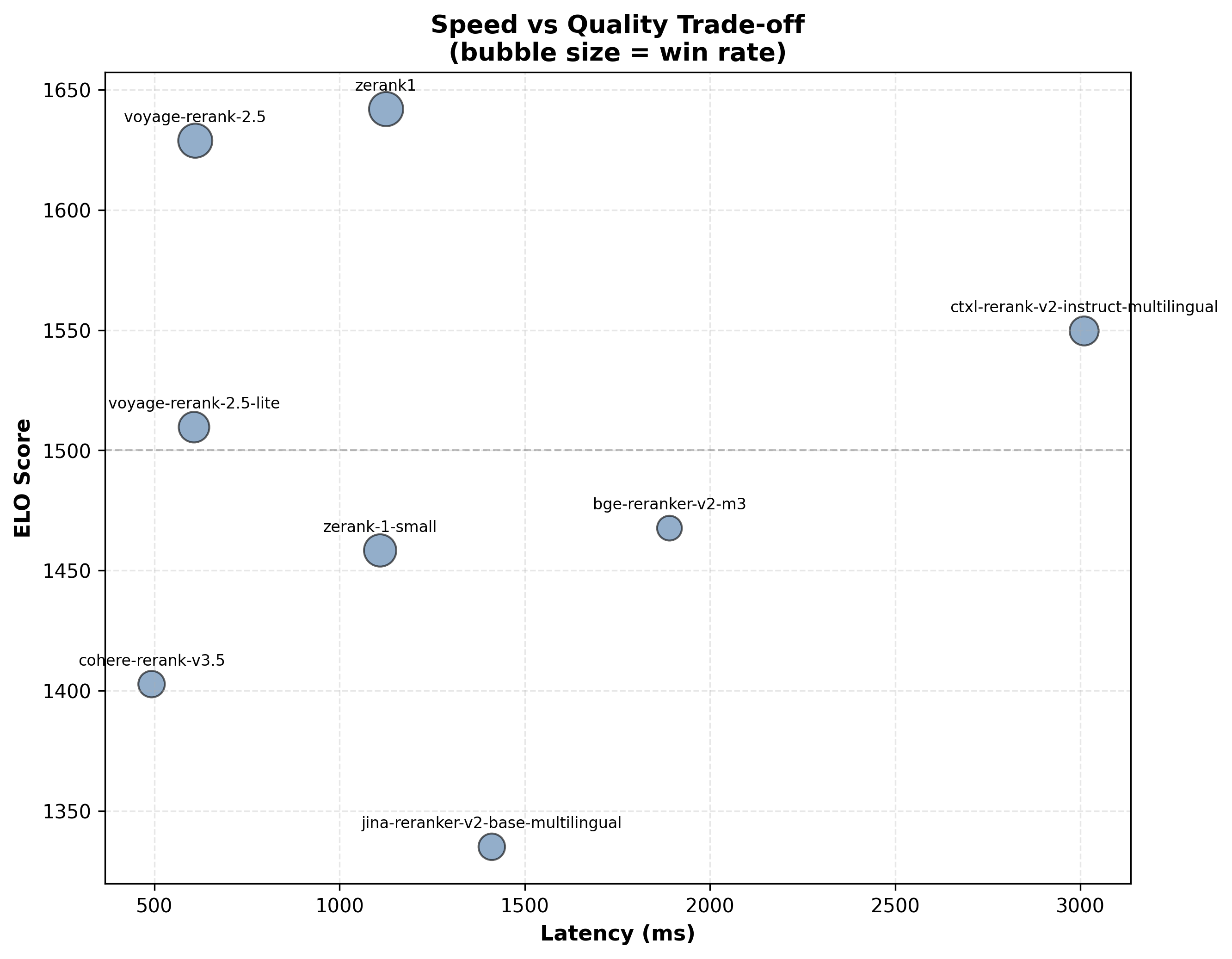

Speed vs Quality

To understand how other models compare on this balance, we visualized the trade-off between speed and quality.

Each bubble represents a reranker's win rate in head-to-head comparisons. Cohere v3.5 was the fastest but less favored by the LLM judge. Voyage Rerank 2.5 hit the middle ground, combining strong accuracy with low latency: the practical sweet spot for RAG pipelines.
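As a rough sketch of how such a chart can be derived, the snippet below tallies head-to-head win rates from (winner, loser) judgments and then keeps only the models on the speed/quality Pareto front, meaning no other model is both faster and more preferred. The Pareto filter is one common way to formalize a "middle ground," not necessarily how our chart was built, and all model names and numbers here are hypothetical.

```python
from collections import defaultdict


def win_rates(judgments):
    """Win rate per model from a list of (winner, loser) pairs."""
    wins = defaultdict(int)
    games = defaultdict(int)
    for winner, loser in judgments:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    return {model: wins[model] / games[model] for model in games}


def pareto_front(models):
    """Names of models not dominated on (latency, win rate).

    `models` maps name -> (latency_ms, win_rate). A model is dominated if some
    other model is at least as fast and at least as preferred, and strictly
    better on at least one of the two.
    """
    front = []
    for name, (latency, rate) in models.items():
        dominated = any(
            o_lat <= latency and o_rate >= rate and (o_lat < latency or o_rate > rate)
            for other, (o_lat, o_rate) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Hypothetical latency (ms) and win-rate figures, for illustration only.
candidates = {
    "fast_model": (100, 0.40),
    "balanced_model": (200, 0.60),
    "accurate_model": (400, 0.70),
    "dominated_model": (300, 0.50),
}
print(pareto_front(candidates))
```

Every model on the front is a defensible choice; which one to pick depends on how much latency a given pipeline can afford.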

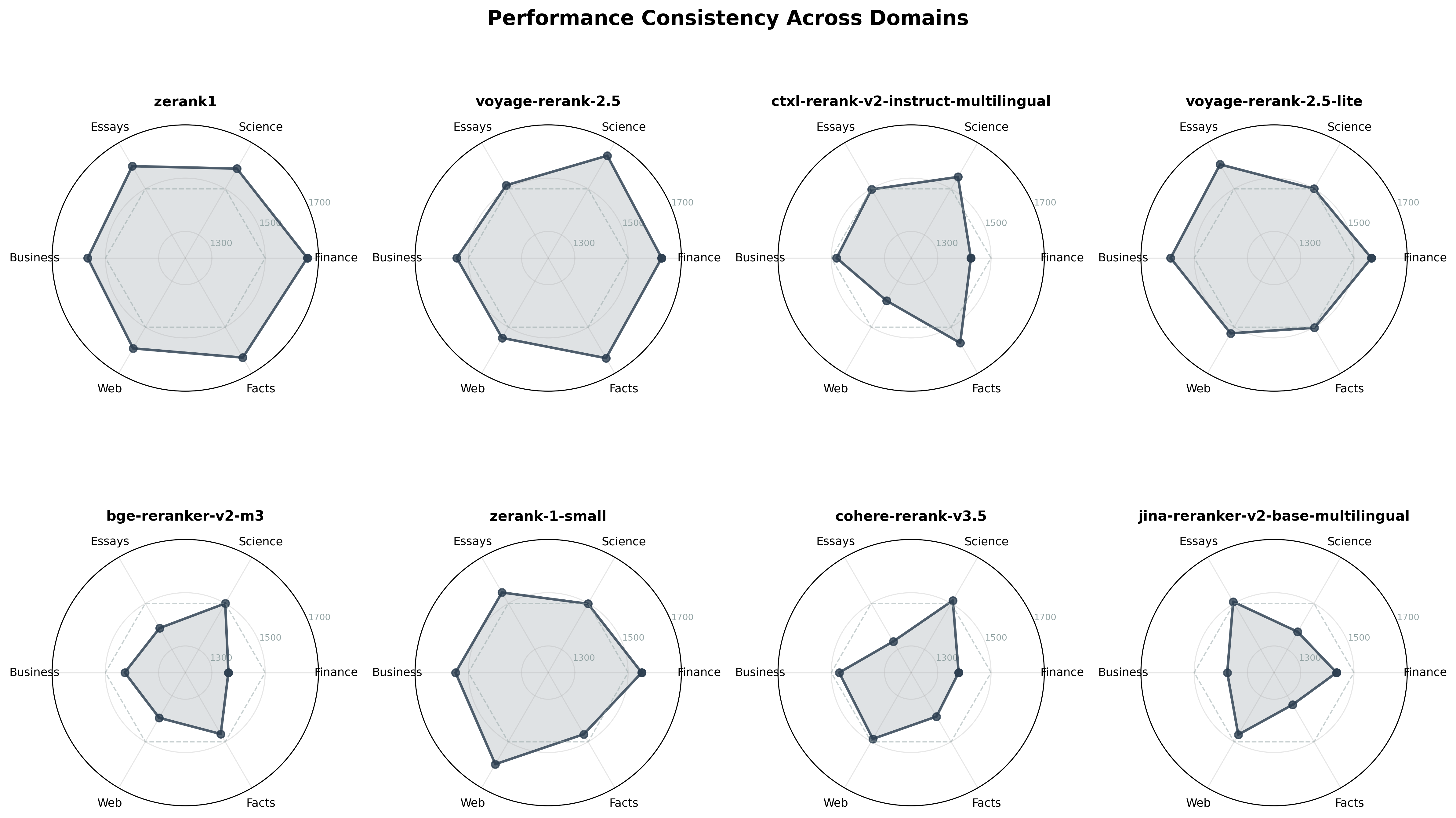

Consistency

However, high performance isn’t just about speed or accuracy; it’s also about consistency. How stable are rerankers across different types of data? To see this, we visualized their ELO scores on six datasets: finance, business, essays, web, facts, and science.

Rounded radar shapes indicate consistent performance, while sharp spikes reveal strengths in some areas and weaknesses in others.
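The radar charts show consistency visually. To put a number on "rounded vs. spiky," one simple option is to summarize each model's per-domain ELO scores by their standard deviation and max-min spread. This metric is an illustrative assumption, not part of our benchmark, and the scores below are hypothetical.

```python
from statistics import mean, pstdev

# The six domains used in the benchmark.
DOMAINS = ["finance", "business", "essays", "web", "facts", "science"]


def consistency_summary(scores_by_domain):
    """Summarize how evenly a model performs across domains.

    A low std and spread correspond to a rounder radar shape; high values
    indicate spikes (strong in some domains, weak in others).
    """
    values = [scores_by_domain[d] for d in DOMAINS]
    return {
        "mean": mean(values),
        "std": pstdev(values),
        "spread": max(values) - min(values),
        "weakest": min(DOMAINS, key=lambda d: scores_by_domain[d]),
    }


# Hypothetical per-domain ELO scores for a generalist and a specialist.
generalist = dict.fromkeys(DOMAINS, 1550)
specialist = {**dict.fromkeys(DOMAINS, 1500), "science": 1700, "web": 1300}
print(consistency_summary(generalist))
print(consistency_summary(specialist))
```

Reporting the weakest domain alongside the spread shows where a specialized model would need backup before it is used on mixed traffic.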

Here, Zerank-1 and Voyage Rerank 2.5 stand out with smooth, wide coverage: they handle every domain well, making them reliable generalists. In contrast, CTXL-Rerank v2 peaks in science and facts but dips in web and finance, so it is more specialized than stable. Models like Cohere v3.5 and BGE v2-M3 show even sharper spikes, performing well only in select domains.

Takeaway

Across accuracy, speed, and consistency, Zerank-1 comes out strongest in relevance, while Voyage Rerank 2.5 offers the best balance between quality and latency. Models like CTXL-Rerank v2, Cohere v3.5, and BGE v2-M3 also perform well in specific areas, making them suitable options for more targeted use cases.

Overall, the results show how rerankers differ: some lead in accuracy, others in speed, and a few manage to balance both.

What’s Next

This benchmark is just the start. We’ll keep expanding it with new rerankers and track where each one excels or falls short.

You can explore the full results on our Leaderboard Page or check out the GitHub repo to see how the evaluation pipeline works.